In one lifetime, computers have gone from lumbering giants that filled a room to digital spectacles that can record and photograph everything you see. So where will we go next? STEPHEN LEWIS reports.

SEVENTY years ago, the world’s first programmable electronic computer was coughing out its first answers in a top-secret shed in the grounds of Bletchley Park.

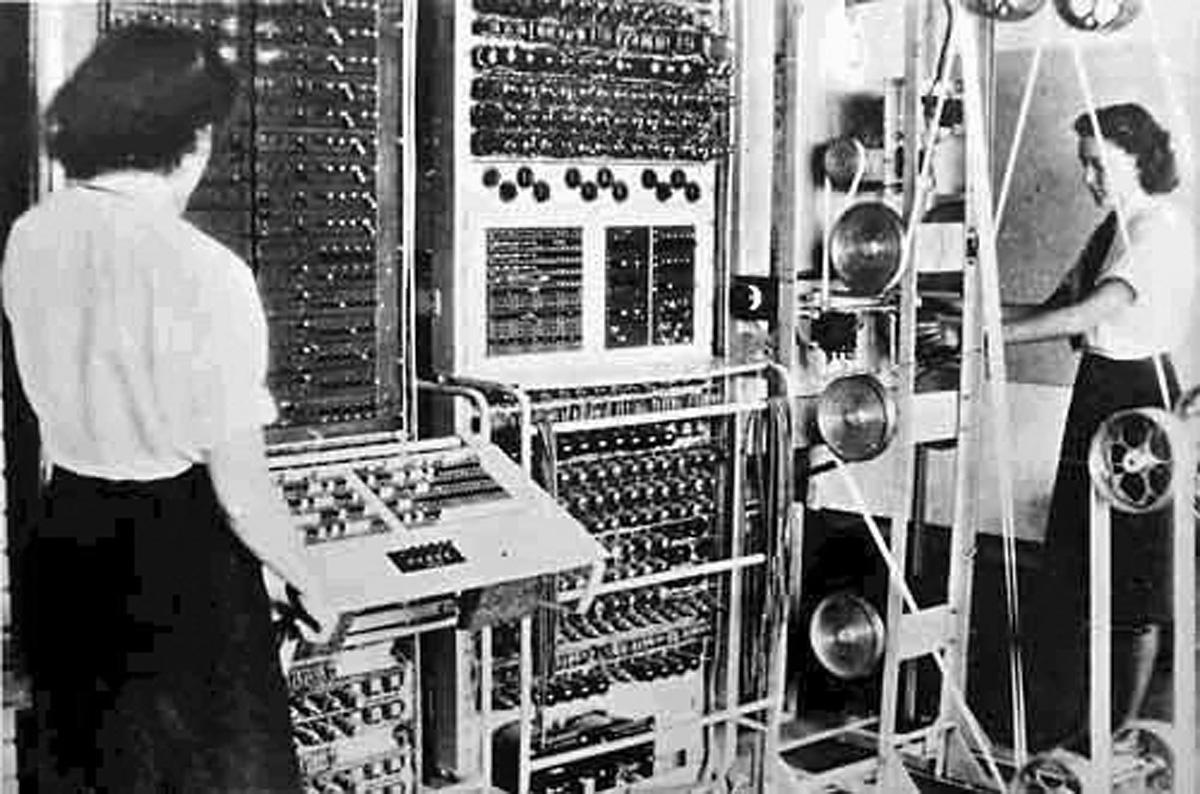

It was called Colossus by those who worked on it – for good reason. The computer consisted of about 1,800 thermionic valves – what the Americans called vacuum tubes. Each looked a bit like a light bulb, and each functioned as an electronic on-off switch – the basis of digital computing.

The entire machine would have filled a small room – and when in operation, each of those thermionic valves would have glowed red hot, says Richard Hind, programme leader of the foundation degree in applied computing at York College.

“It was left running 24 hours a day, so you can imagine how hot it got,” he says. “There were stories of Wrens (female naval personnel) having to work in their underwear!”

Colossus was the brainchild of Tommy Flowers, an engineer with the General Post Office who had been exploring the use of valves to make electronic, as opposed to manual, telephone exchanges.

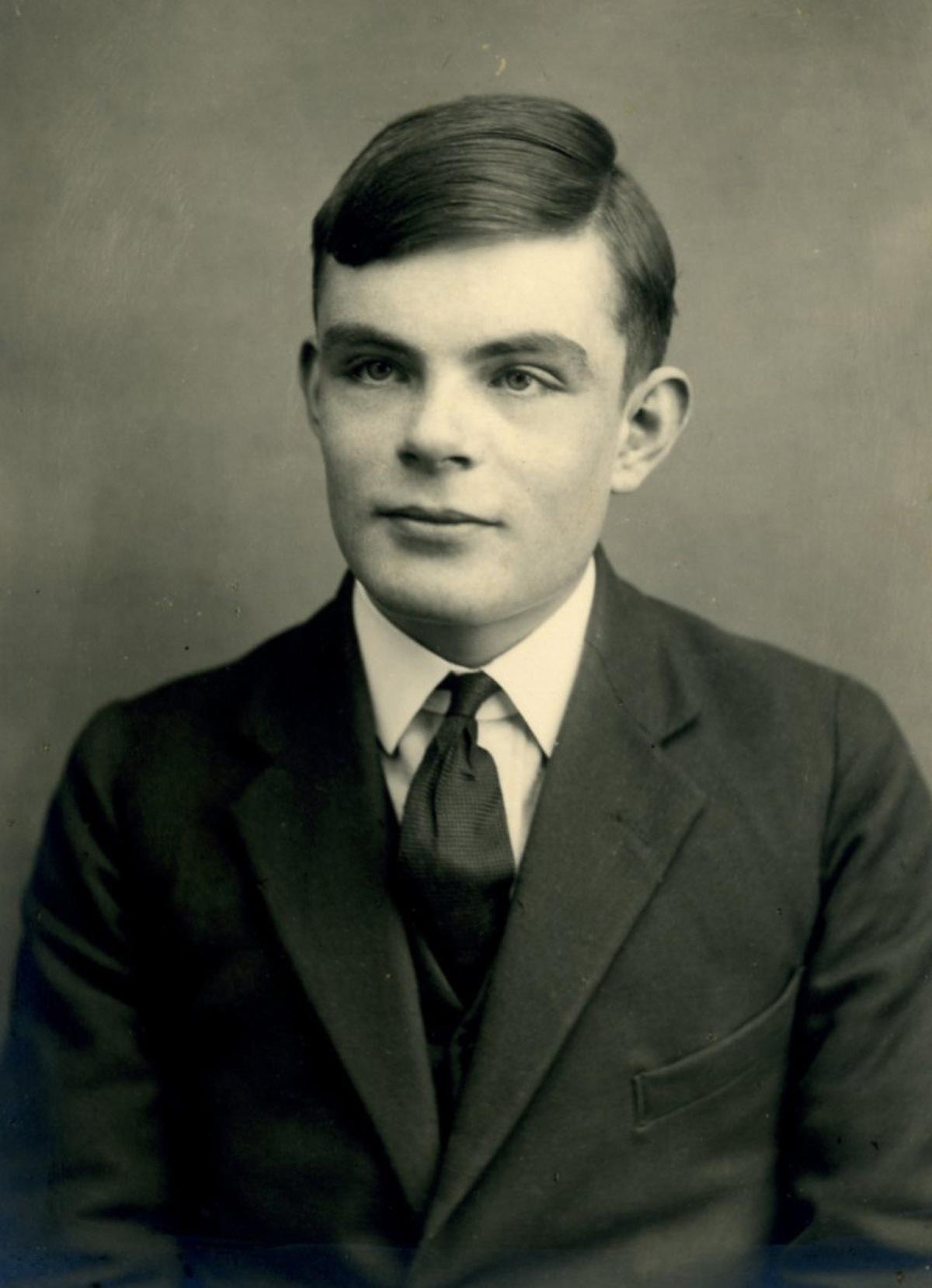

Alan Turing, the mathematician who was working on secret codebreaking projects at Bletchley Park, asked Flowers’ boss for help in designing faster decoders to crack the Germans’ Enigma code.

Instead Flowers came up with Colossus. It was first built by Flowers and his team at the Post Office research centre at Dollis Hill – with Flowers pouring much of his own money into it.

By January 1944 it had been installed at Bletchley Park. Soon afterwards, a more advanced computer, the Colossus Mark 2, was up and running – and unknown to the rest of the world, it played a crucial part in the D-Day landings.

Colossus Mark 2 was able to crack the Lorenz cipher. Enigma was a much simpler code, used for German day-to-day battlefield communications, Richard says.

“Lorenz was for Hitler’s top-level communications. It was almost uncrackable.”

Until Colossus came along. It was able to decrypt messages that confirmed that Hitler thought the Allied preparations for the Normandy landings were a diversion – helping Eisenhower to make the decision to go ahead.

The rest is history, as they say – although Colossus was so secret that its part in that history was not known until the 1970s.

Colossus Mark 2 was even bigger than Mark 1, with 2,500 thermionic valves.

It used a paper tape loop to decode key characters in encrypted messages – leaving the remainder of the message to be decrypted manually.

In 70 short years, we have gone from that lumbering, room-sized, cranky collection of 2,500 vacuum tubes to Google Glass: a microcomputer and optical display, worn like a pair of spectacles, that will give you directions as you walk around, record what you see and share it instantly over the internet, and take a picture when you say ‘take a picture’.

All that in just one lifetime, says Richard, who will be giving a public lecture on the evolution of computers at York College on March 19.

“We have had programmable computers for 70 years,” the 44-year-old says. “That’s your traditional three score years and ten, the human lifetime. That’s what makes it so amazing. Seventy years ago today there were just a few hundred people that knew about Colossus.

“Today the average home has 100 microprocessors in it. They are everywhere – in your mobile phone, your digital TV, your radio/alarm, your dishwasher, your car – your car probably has half a dozen.”

The sheer speed with which computers have taken over our lives has been astonishing, and it is down to an explosion in computing power.

Since the early 1960s, when integrated circuits first came into use, the number of transistors that can be packed onto a single chip has roughly doubled every two years.

It is known as Moore’s Law, after Intel co-founder Gordon E Moore, who proposed it in 1965 (he originally predicted a doubling every year, then revised it to every two years in 1975) – and it still holds true today, says Richard.

For decades, in other words, there has been an exponential, year-on-year increase in computing power that has taken us to places those early Bletchley Park pioneers could never have dreamed of.
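The arithmetic behind that exponential growth is simple enough to sketch. The snippet below is a minimal illustration rather than a model of real chips: it assumes a perfectly steady doubling every two years, and its function names are hypothetical. The 2,300-transistor starting point is borrowed from the timeline at the end of this article, and the 40-year span is chosen purely for illustration.

```python
def moore_growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years` if the count doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def project_transistors(start_count: int, years: float, doubling_period: float = 2.0) -> float:
    """Idealised transistor count after `years`, starting from `start_count`."""
    return start_count * moore_growth_factor(years, doubling_period)


if __name__ == "__main__":
    # Ten years of doubling every two years is five doublings: 2**5 = 32.
    print(moore_growth_factor(10))                   # 32.0
    # Illustrative only: 2,300 transistors, projected 40 years on (20 doublings).
    print(f"{project_transistors(2_300, 40):,.0f}")  # 2,411,724,800
```

Moore’s original 1965 estimate was a doubling every year; passing `doubling_period=1.0` shows how much more steeply that curve climbs.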

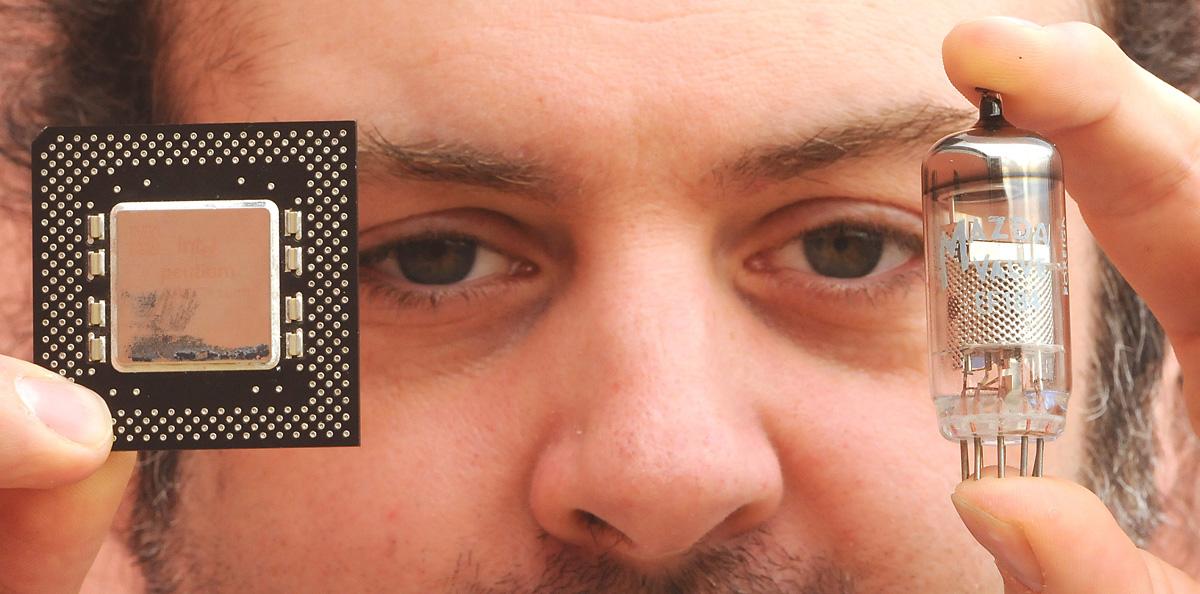

Richard illustrates the point by holding up a single thermionic valve of the kind used in Colossus alongside a 1998 Intel Pentium central processing unit, or CPU.

“It (the CPU) is equivalent to 50 million of these valves,” he says. And the latest microprocessors are many, many times more powerful again.

Niall O’Reilly, a former IT technician who, at 28, is now one of Richard’s students on the foundation degree in applied computing, is awed by the pace of change and admits that it can be difficult to keep up. At the moment he can’t imagine anything improving on his HTC One smartphone. “It has everything I want.”

But then he looks back at his first laptop. “And when you remember that, you think ‘wow’!”

He’s one of those who thinks the explosion in computing power will have to slow down one day – that we will get to the point where the laws of physics dictate we can’t make microprocessors any smaller and faster.

But that could well be some way off, and at the moment, the potential for future developments is astonishing. Google Glass is with us already, as are things like cochlear implants, which use processing power to help the deaf to hear again.

Similar ‘augmentations’ for the eyes, and who knows what else, are surely not far off.

Richard also foresees a time when radio-frequency identification (RFID) tags, a kind of intelligent bar code, can be attached to just about everything we make.

“So your fridge will tell you when your milk is out of date; there will be ‘intelligent recycling’ where the tags identify exactly what something is made of and how old it is; and if you drop litter on the street, an intelligent bin will know it was you.”

There are some clear Big Brother implications to all of this, he admits. “We already chip our pets. How long before we start chipping ourselves?”

Another consequence of the digital revolution is the impact on jobs. The teams of men and women employed to carry out arduous mechanical calculations were replaced long ago; robots have taken the place of workers in many factories; hot-metal printing and the craftsmen who did it are a thing of the past.

With the development of online and e-education, Richard can even see a time when there won’t be much need for teachers.

Then there is the whole issue of artificial intelligence (AI). Modern computers are astonishingly fast, but they are still way behind the human brain in complexity.

They are digital, based on a simple on-off system, which makes them very black-and-white: there is no room for the fuzzy emotions and subjective perceptions of the human brain. And while they are very fast, they still can’t match the myriad interconnections within the brain.

But one of the aims of AI research is to develop a computer that approximates, or at least approaches, consciousness.

That raises all sorts of moral issues, Richard says. “If you switch it off, would that be murder?”

That’s a question that may well require a better brain than ours to resolve.

• Richard Hind’s public lecture, 70 Years Of Electronic Computing, will be in the lecture theatre at York College at 5pm on Wednesday, March 19. The talk is free and open to all. To find out more, visit eventbrite.co.uk/e/70-years-of-electronic-computing-open-lecture-registration-10098110721

Here are a couple of computing comparisons:

• A modern smartphone has about 4 GB of memory, ie about 4 million KB. The computer which guided the Apollo space missions had about 4 KB of memory – one million times less.

• The human brain has 100 billion processing units (neurons), each with about 10,000 connections. It consumes about 20 watts of power. Sequoia, one of the world’s most powerful supercomputers, has more than 1.5 million processor cores (each with around 100 million switches). It consumes 7.8 million watts of power.
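The ratios in these comparisons come down to straightforward division. Here is a minimal sketch that reproduces them from the figures quoted above, assuming decimal units (1 GB = 1,000,000 KB), as the smartphone comparison does; the function and constant names are illustrative only. With binary units (1 GB = 1,048,576 KB) the memory gap comes out slightly larger.

```python
# Figures are those quoted in the comparisons above.
KB_PER_GB_DECIMAL = 1_000_000   # 1 GB = 1,000,000 KB (decimal units, as in the text)
KB_PER_GB_BINARY = 1024 * 1024  # 1 GB = 1,048,576 KB (binary units)


def memory_ratio(phone_gb: float, apollo_kb: float, kb_per_gb: int = KB_PER_GB_DECIMAL) -> float:
    """How many times more memory the phone has than the Apollo guidance computer."""
    return phone_gb * kb_per_gb / apollo_kb


def power_ratio(machine_watts: float, brain_watts: float) -> float:
    """How many times more power the machine draws than the human brain."""
    return machine_watts / brain_watts


if __name__ == "__main__":
    print(f"{memory_ratio(4, 4):,.0f}")                    # 1,000,000
    print(f"{memory_ratio(4, 4, KB_PER_GB_BINARY):,.0f}")  # 1,048,576
    print(f"{power_ratio(7_800_000, 20):,.0f}")            # 390,000
```

On these numbers, the supercomputer draws roughly 390,000 times as much power as the brain.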

A brief history of scientific advances

• 1600s – First mechanical calculating machines

• 1800s – Babbage designs first mechanical computer

• 1904 – Valves invented

• 1930s – Turing comes up with the concept of a universal computing machine, before being recruited to Bletchley Park

• 1944 – Colossus becomes the world’s first programmable electronic computer (top secret)

• 1946 – ENIAC, built in the US, becomes the first publicly announced electronic computer

• 1947 – Transistors invented

• 1951 – UNIVAC, the first commercial computer made in the US, could do about 450 calculations per second. It cost more than $1 million, and 46 were made.

• 1958 – Jack Kilby at Texas Instruments demonstrates the world’s first integrated circuit, with several components on a single sliver of germanium

• 1960s – Transistors replace valves, computers become faster, smaller, more reliable and cheaper

• 1969 – The first version of the internet appears (known as the Arpanet)

• 1971 – Intel launches the first microprocessor, the 4004. It has 2,300 transistors, roughly the same number of switches as Colossus Mk II, and measures about 2cm x 1cm x 0.5cm

• 1980 – The Sinclair ZX80 starts the home computer revolution in the UK. It cost under £100, and its processor ran 50 per cent faster than UNIVAC’s.

• 1981 – IBM launches the PC. Each cost about £2,500 and used floppy disks and a text-only interface.

• 1984 – The Apple Mac is launched

• 1985 – Microsoft Windows is launched

• 1991 – The World Wide Web appears, based on an idea from Sir Tim Berners-Lee

• 2003 – Probably the world’s first smartphone (made by BlackBerry)
